Encoding in Grid Cells: Uniqueness, Redundancy and Synergy

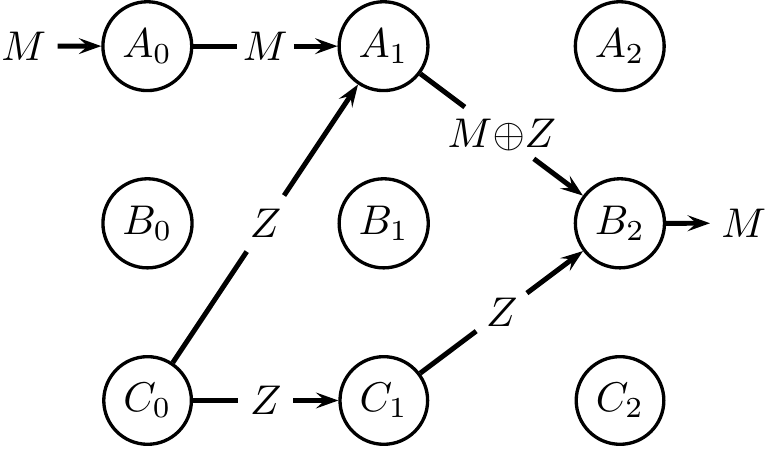

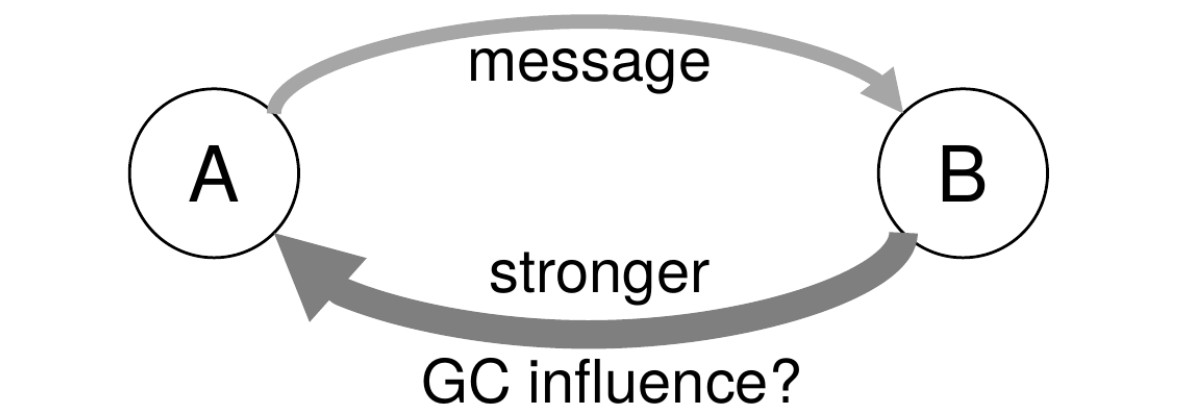

An important contribution of my earlier work on information flow lies in recognizing that a measure of information flow must account for synergy if it is to guarantee that a message can be tracked as it flows through a computational system. This year I presented a poster at Cosyne that supports and extends this result in several key directions:
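Why synergy matters for tracking a message can be seen in a standard one-time-pad toy. This is an illustrative assumption, not an example taken from the earlier work. A message bit M is XORed with an independent, uniformly random key bit Z and sent on one wire, X = M xor Z, while the key travels on a second wire, Y = Z. Neither wire alone carries any information about M, yet the pair determines it exactly. A flow measure that only counts what individual wires know about M would therefore lose track of the message. The helper `mutual_information` below is a hypothetical plug-in estimator over equally likely outcomes.

```python
import itertools
from collections import Counter
from math import log2

def mutual_information(pairs):
    """I(S;T) in bits, given a list of equally likely (s, t) outcomes."""
    n = len(pairs)
    joint = Counter(pairs)
    p_s = Counter(s for s, _ in pairs)
    p_t = Counter(t for _, t in pairs)
    # Sum of p(s,t) * log2[p(s,t) / (p(s) p(t))], written with raw counts.
    return sum((c / n) * log2(c * n / (p_s[s] * p_t[t])) for (s, t), c in joint.items())

# Every (message, key) pair is equally likely: M and Z are independent fair bits.
outcomes = list(itertools.product((0, 1), repeat=2))
wire_x = [(m ^ z, m) for m, z in outcomes]     # (X, M) with X = M xor Z
wire_y = [(z, m) for m, z in outcomes]         # (Y, M) with Y = Z
both = [((m ^ z, z), m) for m, z in outcomes]  # ((X, Y), M)

i_x, i_y, i_xy = (mutual_information(p) for p in (wire_x, wire_y, both))
print(f"I(M;X)={i_x:.1f}  I(M;Y)={i_y:.1f}  I(M;X,Y)={i_xy:.1f}")
```

This prints zero bits for each wire on its own and one bit for the two wires together. All of the information about M is carried synergistically.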

- First, it demonstrates how ideas such as uniqueness, redundancy and synergy are highly relevant in neural systems, through a case study on entorhinal grid cells;

- Second, it shows how recent technical advances in information theory provide new ways to quantify uniqueness, redundancy and synergy in such systems. This gives neuroscientists a new tool and lets them pose and answer experimental questions in a completely new way (e.g., how much of the information about an animal’s location is redundantly encoded between grid cells and place cells?);

- Third, it shows that synergy can arise in unexpected ways: it can appear when we ask a different question of the same neurons. We show that each grid module encodes unique information about an animal’s precise location; however, when we ask how these modules encode information about the animal’s location at a very coarse spatial scale, we find that the same modules encode coarse location information synergistically.
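The coarse-scale synergy in the third point can be reproduced in a deliberately simplified toy. Every ingredient here is a hypothetical assumption, not the poster's model or data. The track is a noiseless one-dimensional track of six equally likely positions. There are two grid modules, A and B, with coprime periods 2 and 3, and each module's response is summarized by its spatial phase. The toy asks two questions: where exactly the animal is, and which half of the track it is in. The sketch reports the mutual informations and the co-information I(A;T) + I(B;T) - I(A,B;T). Co-information is only the net balance of redundancy minus synergy, not one of the partial information decomposition measures mentioned above, so treat it as a rough diagnostic.

```python
from collections import Counter
from math import log2

def mutual_information(pairs):
    """I(S;T) in bits, given a list of equally likely (s, t) outcomes."""
    n = len(pairs)
    joint = Counter(pairs)
    p_s = Counter(s for s, _ in pairs)
    p_t = Counter(t for _, t in pairs)
    return sum((c / n) * log2(c * n / (p_s[s] * p_t[t])) for (s, t), c in joint.items())

POSITIONS = range(6)       # hypothetical 1-D track: 6 equally likely positions
PERIOD_A, PERIOD_B = 2, 3  # hypothetical coprime module periods

def phase(x, period):
    # Summarize a module's (noiseless) response by its spatial phase.
    return x % period

def info_terms(target):
    """Return I(A;T), I(B;T), I(A,B;T) and the co-information for T = target(x)."""
    a = [(phase(x, PERIOD_A), target(x)) for x in POSITIONS]
    b = [(phase(x, PERIOD_B), target(x)) for x in POSITIONS]
    ab = [((phase(x, PERIOD_A), phase(x, PERIOD_B)), target(x)) for x in POSITIONS]
    i_a, i_b, i_ab = mutual_information(a), mutual_information(b), mutual_information(ab)
    # Positive co-information means net redundancy; negative means net synergy.
    return i_a, i_b, i_ab, i_a + i_b - i_ab

fine = info_terms(lambda x: x)         # question 1: the precise position
coarse = info_terms(lambda x: x // 3)  # question 2: which half of the track

for name, terms in (("fine", fine), ("coarse", coarse)):
    print(name, " ".join(f"{v:.3f}" for v in terms))
```

For the precise position, the joint information (log2 6 bits) equals the sum of the two modules' individual informations, so the co-information is zero. This is consistent with each module contributing only unique information, although co-information alone cannot rule out redundancy and synergy cancelling. For the coarse question, module B alone carries no information and module A carries only about 0.08 bits. Together, the two modules determine the half exactly (1 bit), giving a strongly negative co-information, that is, net synergy.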

This work thus suggests that synergy may be common, and that whether it appears depends on how one looks at the same information. I chose to highlight this work despite its being only an abstract because it fits well into my future vision: I seek to discover, through collaborations, more examples of neural systems that are ripe for information-theoretic exploration.